Embedded vision framework and camera demonstrator

For customers planning to build an embedded vision system, we offer a framework and the infrastructure on which such a system can be based.

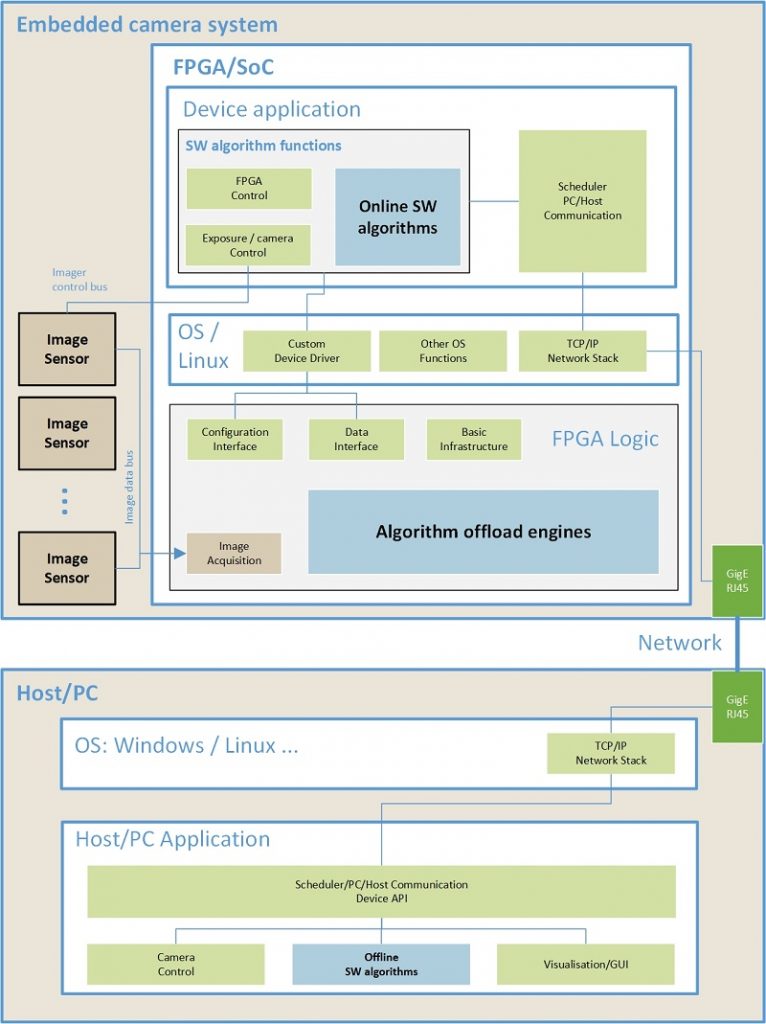

The figure above shows a diagram of the functional system components. A fully featured Linux operating system runs on the demonstrator camera. On top of this, the device application runs the software tasks necessary to operate the camera. The FPGA functionality and the algorithm accelerators implemented in the FPGA logic are accessible to the software through a custom device driver. All communication between the device application and the underlying hardware is tunnelled through the device driver.

To access and potentially display the camera data on a PC, a low-level API has been implemented that communicates over the network. Customer applications can use this API to get access to the camera data.

The software algorithms performing image processing and control tasks can run either on the device or on a host PC. All algorithms and user functions run inside functors with a common interface. It is therefore possible to migrate functionality between the device and the host PC by changing only the configuration, not the functors themselves. This makes debugging of user functions much easier, because they can mostly be developed on the host PC.
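The functor idea can be illustrated with a minimal sketch. It is not the framework's actual interface: the names `Frame`, `Functor`, `Stage`, `makeFunctor`, `runStages` and `runPipeline` are hypothetical, a plain byte vector stands in for the OpenCV image type, and the network hand-over between device and host is reduced to a function call. The sketch also assumes that all device stages precede the host stages in the configuration.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for an image buffer. The real framework is described
// as using OpenCV data types; a plain struct keeps this sketch dependency-free.
struct Frame {
    std::vector<std::uint8_t> pixels;
};

// Assumed common interface: every algorithm or user function maps a frame
// to a frame, regardless of where it is executed.
class Functor {
public:
    virtual ~Functor() = default;
    virtual Frame operator()(const Frame& in) const = 0;
};

// Example user function: invert every pixel.
class Invert : public Functor {
public:
    Frame operator()(const Frame& in) const override {
        Frame out = in;
        for (auto& p : out.pixels) p = static_cast<std::uint8_t>(255 - p);
        return out;
    }
};

// Example user function: binarize against a fixed threshold.
class Threshold : public Functor {
public:
    explicit Threshold(std::uint8_t level) : level_(level) {}
    Frame operator()(const Frame& in) const override {
        Frame out = in;
        for (auto& p : out.pixels) p = static_cast<std::uint8_t>(p >= level_ ? 255 : 0);
        return out;
    }
private:
    std::uint8_t level_;
};

enum class Target { Device, Host };

// One configuration entry: which functor to run and where to run it.
struct Stage {
    std::string functor;
    Target target;
};

// Hypothetical factory mapping configuration names to functor instances.
std::unique_ptr<Functor> makeFunctor(const std::string& name) {
    if (name == "invert") return std::make_unique<Invert>();
    if (name == "threshold") return std::make_unique<Threshold>(128);
    return nullptr;
}

// Run only the stages that the configuration assigns to `here`, in order.
Frame runStages(const std::vector<Stage>& config, Target here, Frame frame) {
    for (const auto& stage : config) {
        if (stage.target != here) continue;
        auto f = makeFunctor(stage.functor);
        assert(f && "unknown functor name in configuration");
        frame = (*f)(frame);
    }
    return frame;
}

// Device stages run first; their result is then handed to the host stages.
// In a real system this hand-over would go through the network API.
Frame runPipeline(const std::vector<Stage>& config, const Frame& input) {
    Frame afterDevice = runStages(config, Target::Device, input);
    return runStages(config, Target::Host, afterDevice);
}
```

Moving a stage from the host to the device only changes its `Target` entry in the configuration. The functor classes stay untouched, which is what allows a user function to be developed and debugged on the host PC first.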

Image processing and data types are based on OpenCV version 3, so all OpenCV functionality is available for application development both on the device and on the host PC.

Processing pipeline and IP

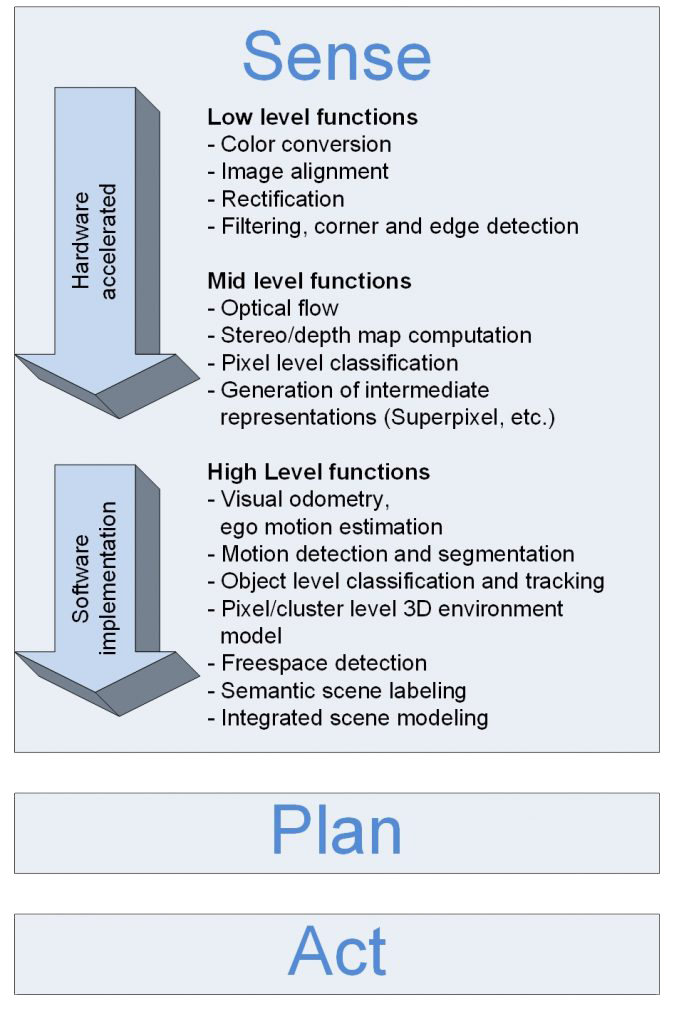

Our view of the processing pipeline for the “Sense” stage of the robotics paradigm “Sense-Plan-Act” is shown in the image above. The choice of functionality depends on the application and on implementation decisions. This applies above all to the high-level functions and, to a lesser extent, to the low- and mid-level algorithms.

The processing pipeline is split into functionality implemented in hardware and functionality implemented in software. This split is driven by computing power requirements, but it also partly reflects the separation into low-, mid- and high-level functions.

Low-level and some mid-level functions mostly operate at pixel level and have to be executed at frame rate, so they require a large amount of processing power. They also exhibit a very regular structure. Together, these properties make them ideal candidates for hardware implementation.
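To make the processing power argument concrete, the following sketch counts the multiply-accumulate operations of a pixel-level filter and shows the regular loop structure of such a function. The 3 x 3 box filter, the function names `macsPerSecond` and `boxFilter3x3`, and the resolution and frame rate used in the note after the code are illustrative assumptions, not specifications of the demonstrator camera.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Multiply-accumulate operations per second for a kernel x kernel filter
// that is applied to every pixel of every frame.
constexpr std::uint64_t macsPerSecond(std::uint64_t width, std::uint64_t height,
                                      std::uint64_t kernel, std::uint64_t fps) {
    return width * height * kernel * kernel * fps;
}

// 3 x 3 box filter on an 8-bit grayscale image stored row by row.
// Every interior pixel goes through the same nine reads and one write.
// Border pixels are copied unchanged.
std::vector<std::uint8_t> boxFilter3x3(const std::vector<std::uint8_t>& src,
                                       int width, int height) {
    std::vector<std::uint8_t> dst(src);
    for (int y = 1; y + 1 < height; ++y) {
        for (int x = 1; x + 1 < width; ++x) {
            unsigned sum = 0;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    sum += src[static_cast<std::size_t>((y + dy) * width + (x + dx))];
                }
            }
            dst[static_cast<std::size_t>(y * width + x)] =
                static_cast<std::uint8_t>(sum / 9);
        }
    }
    return dst;
}
```

For an assumed 1920 x 1080 image at 60 frames per second, `macsPerSecond(1920, 1080, 3, 60)` evaluates to 1,119,744,000, or roughly 1.1 billion operations per second for a single small filter. The fixed, data-independent loop body is the kind of regular structure that maps well onto a pipelined hardware implementation.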

For the hardware-accelerated algorithms, our focus is on the low- and mid-level functionality. We believe that our choice of algorithms, as shown in the diagram above, can be used for many applications.